Eric DeGrass

June 17th, 2022

It all started in January of 2018. My team and I had just completed the build-out of our third datacenter in 12 months. We had only begun moving production workloads when a VMware NSX bug brought everything crashing down. For the next 22 hours, we worked to restore operations.

That was only the beginning of a very painful year. Over the next several months, we suffered additional datacenter outages caused by a NetApp QoS bug, a Cisco ASA bug, and others.

I spoke with industry peers and heard similar horror stories. It was comforting to hear that I was not alone, but... was this just how it had to be? All I could think was:

"Are all IT Operations professionals doomed to suffer like this during their careers?"

Over the next few months, I spent a considerable amount of time searching for solutions. I asked several industry colleagues what they were doing to mitigate this risk, but none of them had an answer. By August, I realized there was no product available that would help prevent 2AM datacenter outages.

So, I decided to build one. My team and I cobbled together a semi-automated process that was better than the manual processes that were then the industry standard.

After the initial outages, Cisco offered to set us up with a Technical Account Manager (TAM). The TAM would pick up the phone and call us whenever Cisco published a new bug. But how was this any better than getting more emails in our inboxes?

My team members were some of the best and brightest, but like many IT teams, we were understaffed relative to the expectations of the business. This problem was too complex for humans to solve without help. They needed something more than spreadsheets and an email inbox to manage thousands of different risks.

"There had to be a better way."

Finally, in late 2018, at the Gartner Infrastructure and Operations conference, I had a light-bulb moment. Everyone I spoke to had stories similar to mine. When I described what we had recently built, nearly everyone remarked on how much better our solution was than the status quo.

"I came to the realization that I could help others avoid the pain I had experienced."

For decades, we’ve had monitoring tools that tell us when a hard drive fails or an OS service stops working. Why don’t we have a monitoring tool that tells us when the software running our storage or database is broken? Vendor forums have postings about such bugs, but who can realistically keep up with all of those forums?

During my subsequent research and conversations with industry veterans, some folks would give me a puzzled look and ask, “Is this just a CVE data feed?” I had to explain that these bugs were not security vulnerabilities and did not appear in any data feed built on the National Vulnerability Database (NVD) or the CVE framework. They threaten the Availability and Integrity elements of the Information Security Triad through ordinary software defects, whereas CVEs catalog exploitable security vulnerabilities.

Since 2018, I’ve been very fortunate to speak with many IT colleagues while refining our platform, BugZero. I am proud that BugZero is a solution built by IT, for IT. We know security concerns are vitally important to businesses, so we have built security and compliance into the core of our platform.

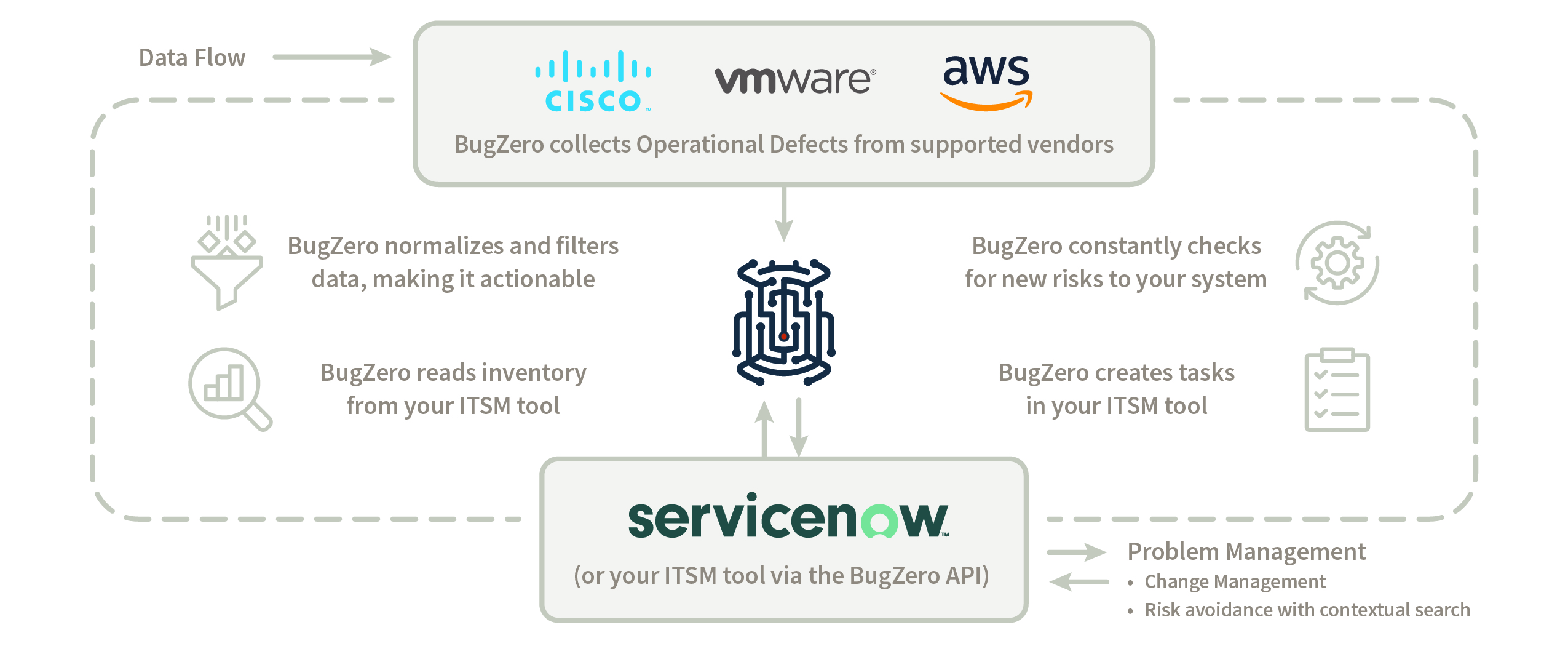

Fast forward to today, and our list of mission-critical IT vendor integrations has reached double digits and is still growing. These integrations report only the risks that are currently present in your environment. We call them operational defects; others call them stability bugs, functional bugs, and so on. You can learn more about how BugZero works here.

"Our vision is to help IT teams be more proactive, increase uptime, and ultimately have a better work/life balance than is possible today."

Why doesn’t IT Ops apply the same level of rigor to operational defects that SecOps applies to CVEs? With BugZero, we’re taking the first step toward reducing the cost of downtime. Year over year, companies are becoming more reliant on IT, and software is becoming more complex. It’s time to use software to fix software.

It’s time for BugZero.

- Eric DeGrass, Founder of BugZero

*Editor’s Note: This letter has been revised and updated.*

